import numpy as np

import scipy as sp

import scipy.sparse as spsp

import scipy.sparse.linalg

import matplotlib.pyplot as plt

%matplotlib inline

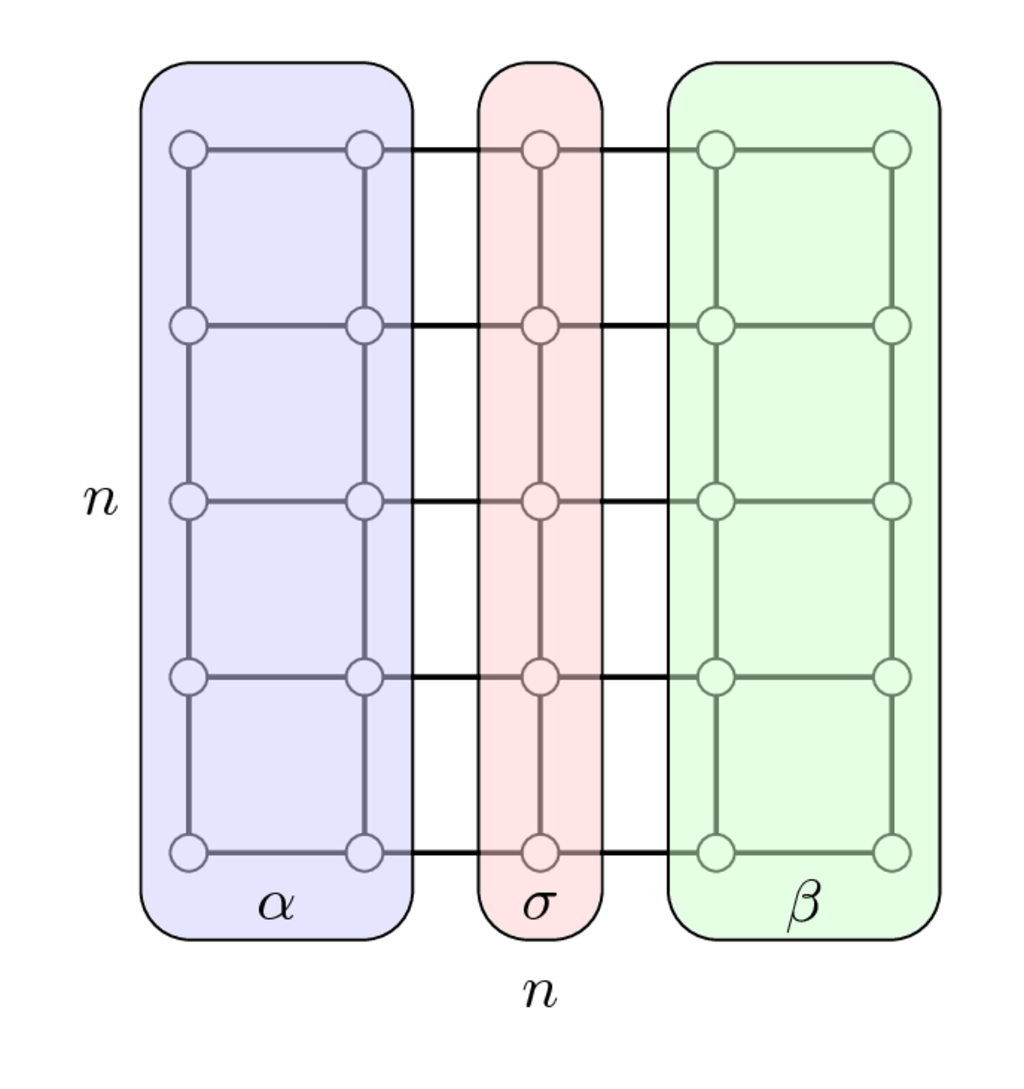

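n = 20  # grid points per direction; the 2D operator below is n**2 x n**2

ex = np.ones(n)

# 1D second-difference matrix tridiag(1, -2, 1) (unscaled, homogeneous Dirichlet boundaries)
lp1 = sp.sparse.spdiags(np.vstack((ex, -2*ex, ex)), [-1, 0, 1], n, n, 'csr')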

e = sp.sparse.eye(n)

# 2D Laplacian as a Kronecker sum, lp1 ⊗ I + I ⊗ lp1 (5-point stencil on an n x n grid)
A = sp.sparse.kron(lp1, e) + sp.sparse.kron(e, lp1)
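As a sanity check on the Kronecker-sum construction, the sketch below assembles the same matrix node by node from the 5-point stencil and compares the two. It assumes row-major grid numbering, where node (i, j) gets index i*n + j; the helper names `laplacian_kron` and `laplacian_5pt` are illustrative, not part of any library.

```python
import numpy as np
import scipy.sparse as spsp


def laplacian_kron(n):
    """Illustrative helper: the Kronecker-sum construction, lp1 (x) I + I (x) lp1."""
    ex = np.ones(n)
    lp1 = spsp.spdiags(np.vstack((ex, -2 * ex, ex)), [-1, 0, 1], n, n, format='csr')
    e = spsp.eye(n)
    return (spsp.kron(lp1, e) + spsp.kron(e, lp1)).tocsr()


def laplacian_5pt(n):
    """Illustrative helper: assemble the 5-point stencil node by node.

    Node (i, j) of the n x n grid gets index i*n + j; neighbours that fall
    outside the grid are dropped (homogeneous Dirichlet boundary).
    """
    rows, cols, vals = [], [], []
    for i in range(n):
        for j in range(n):
            k = i * n + j
            rows.append(k); cols.append(k); vals.append(-4.0)  # centre weight
            for di, dj in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ii, jj = i + di, j + dj
                if 0 <= ii < n and 0 <= jj < n:
                    rows.append(k); cols.append(ii * n + jj); vals.append(1.0)
    return spsp.csr_matrix((vals, (rows, cols)), shape=(n * n, n * n))


n = 20
A_kron = laplacian_kron(n)
A_stencil = laplacian_5pt(n)

# Largest entrywise difference between the two assemblies
print(abs(A_kron - A_stencil).max())
print(A_kron.shape, A_kron.nnz)
```

With row-major numbering, `kron(lp1, I)` couples nodes n indices apart (neighbours in i) and `kron(I, lp1)` couples adjacent indices (neighbours in j), which is why the diagonal becomes -2 + -2 = -4.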

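# splu is most efficient on CSC input (other formats are converted first)
A = spsp.csc_matrix(A)

# Sparse LU via SuperLU; T.L and T.U factor a row- and column-permuted copy of A
T = spsp.linalg.splu(A)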
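The object returned by `splu` is a `SuperLU` factorization whose factors satisfy Pr A Pc = L U, with the permutations stored as index arrays in `perm_r` and `perm_c`. A minimal sketch, following the permutation-matrix convention used in the SciPy `SuperLU` documentation, that rebuilds A from the factors and reuses the factorization to solve a linear system (the right-hand side `b` is an arbitrary choice for illustration):

```python
import numpy as np
import scipy.sparse as spsp
import scipy.sparse.linalg as spla

# Same 2D Laplacian as above
n = 20
ex = np.ones(n)
lp1 = spsp.spdiags(np.vstack((ex, -2 * ex, ex)), [-1, 0, 1], n, n, format='csr')
e = spsp.eye(n)
A = spsp.csc_matrix(spsp.kron(lp1, e) + spsp.kron(e, lp1))

T = spla.splu(A)
N = A.shape[0]

# Permutation matrices built from the SuperLU index arrays (Pr @ A @ Pc = L @ U)
Pr = spsp.csc_matrix((np.ones(N), (T.perm_r, np.arange(N))), shape=(N, N))
Pc = spsp.csc_matrix((np.ones(N), (np.arange(N), T.perm_c)), shape=(N, N))

# Undo both permutations to recover A from the factors (agreement up to round-off)
A_rec = Pr.T @ (T.L @ T.U) @ Pc.T
print(abs(A_rec - A).max())

# Reuse the factorization: solve A x = b with two triangular solves
b = np.ones(N)
x = T.solve(b)
print(np.linalg.norm(A @ x - b))
```

Because of the column permutation, the nonzero pattern of `T.L` should be read as that of the reordered matrix, not of A in its original grid numbering.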

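# Sparsity pattern of the L factor computed by splu
plt.spy(T.L, marker='.', color='k', markersize=8)

# Uncomment to plot the pattern of A itself for comparison:
#plt.spy(A, marker='.', color='k', markersize=8)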
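How much fill-in appears in the plotted L factor depends on the column ordering `splu` applies (SciPy's default is `permc_spec='COLAMD'`). A rough sketch that counts the stored nonzeros of both factors under each ordering `splu` accepts; the total is only a simple proxy for fill-in, and the printed numbers will depend on the SciPy/SuperLU build:

```python
import numpy as np
import scipy.sparse as spsp
import scipy.sparse.linalg as spla

# Same 2D Laplacian as above
n = 20
ex = np.ones(n)
lp1 = spsp.spdiags(np.vstack((ex, -2 * ex, ex)), [-1, 0, 1], n, n, format='csr')
e = spsp.eye(n)
A = spsp.csc_matrix(spsp.kron(lp1, e) + spsp.kron(e, lp1))

# Column-ordering strategies accepted by the permc_spec argument of splu
orderings = ['NATURAL', 'MMD_ATA', 'MMD_AT_PLUS_A', 'COLAMD']

fill = {}
for spec in orderings:
    T = spla.splu(A, permc_spec=spec)
    # Stored nonzeros in both factors (L's unit diagonal is stored explicitly)
    fill[spec] = T.L.nnz + T.U.nnz
    print(f"{spec:>14}: nnz(L) + nnz(U) = {fill[spec]:6d}   (nnz(A) = {A.nnz})")
```

Passing the same `permc_spec` to the `splu` call above and re-running `plt.spy(T.L, ...)` shows the corresponding fill pattern visually.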

