Householder matrices

- A Householder matrix is a matrix of the form

$$H \equiv H(v) = I - 2 v v^*,$$

where $v$ is an $n \times 1$ column and $v^* v = 1$.

- Can you show that $H$ is unitary and Hermitian ($H^* = H$)?

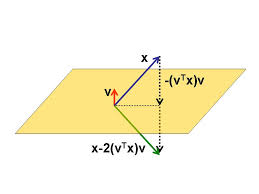

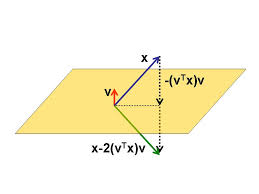

- It is also a reflection: $Hx = x - 2(v^* x) v$ is the mirror image of $x$ with respect to the hyperplane orthogonal to $v$.

We use notation

$$A= \begin{bmatrix} a_{11} & \dots & a_{1m} \\ \vdots & \ddots & \vdots \\ a_{n1} & \dots & a_{nm} \end{bmatrix} \equiv \{a_{ij}\}_{i,j=1}^{n,m}\in \mathbb{C}^{n\times m}.$$

The conjugate transpose is $A^*\stackrel{\mathrm{def}}{=}\overline{A^\top}$.

Recall that vector norms let us measure the distance between two vectors, or how large the elements of a vector are.

How can this concept be generalized to matrices?

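A trivial answer is that there is no big difference between matrices and vectors, which gives the simplest matrix norm, the Frobenius norm:

$$\Vert A \Vert_F \stackrel{\mathrm{def}}{=} \Big(\sum_{i=1}^n \sum_{j=1}^m |a_{ij}|^2\Big)^{1/2}.$$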

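$\Vert \cdot \Vert$ is called a matrix norm if it is a vector norm on the vector space of $n \times m$ matrices:

- $\Vert A \Vert \geq 0$, and $\Vert A \Vert = 0$ only if $A = 0$;
- $\Vert \alpha A \Vert = |\alpha| \Vert A \Vert$;
- $\Vert A + B \Vert \leq \Vert A \Vert + \Vert B \Vert$ (triangle inequality).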

Additionally, some norms satisfy the submultiplicative property

$$\Vert A B \Vert \leq \Vert A \Vert \Vert B \Vert.$$

These norms are called submultiplicative norms.

The submultiplicative property is needed in many places, for example in the estimates for the error of solution of linear systems (we will cover this topic later).

An example of a non-submultiplicative norm is the Chebyshev norm

$$ \|A\|_C = \max_{i,j} |a_{ij}|. $$
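A small numerical counterexample makes the failure of submultiplicativity concrete. The sketch below uses plain NumPy and assumes the Chebyshev norm is the largest absolute entry of the matrix. The helper `chebyshev_norm` and the matrices `A`, `B` are illustrative names, not part of the lecture.

```python
import numpy as np

def chebyshev_norm(a):
    """Largest absolute entry of the matrix (assumed definition of the Chebyshev norm)."""
    return np.max(np.abs(a))

# Two 2 x 2 matrices of ones: every entry of the product A @ B equals 2.
A = np.ones((2, 2))
B = np.ones((2, 2))

lhs = chebyshev_norm(A @ B)                   # norm of the product
rhs = chebyshev_norm(A) * chebyshev_norm(B)   # product of the norms

# For comparison, the Frobenius norm satisfies the inequality on the same pair.
fro_lhs = np.linalg.norm(A @ B, 'fro')
fro_rhs = np.linalg.norm(A, 'fro') * np.linalg.norm(B, 'fro')

print('Chebyshev: ||AB|| =', lhs, ' ||A|| ||B|| =', rhs)
print('Frobenius: ||AB|| =', fro_lhs, ' ||A|| ||B|| =', fro_rhs)
```

Here the Chebyshev norm of the product exceeds the product of the norms, so the inequality $\Vert AB \Vert \leq \Vert A \Vert \Vert B \Vert$ fails for this pair.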

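The most important class of matrix norms is the class of operator norms. They are defined as

$$ \Vert A \Vert_{*,**} = \sup_{x \ne 0} \frac{\Vert A x \Vert_*}{\Vert x \Vert_{**}}, $$

where $\Vert \cdot \Vert_*$ and $\| \cdot \|_{**}$ are vector norms.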

It is easy to check that operator norms are submultiplicative if $\|\cdot\|_* = \|\cdot\|_{**}$. Otherwise they can fail to be submultiplicative; try to think of an example.

The Frobenius norm is a matrix norm, but not an operator norm, i.e. one cannot find $\Vert \cdot \Vert_*$ and $\| \cdot \|_{**}$ that induce it.

An important special case of operator norms is the matrix $p$-norms, which are defined for $\|\cdot\|_* = \|\cdot\|_{**} = \|\cdot\|_p$.

Among all $p$-norms three norms are the most common ones:

$p = 1, \quad \Vert A \Vert_{1} = \displaystyle{\max_j \sum_{i=1}^n} |a_{ij}|$.

$p = 2, \quad$ spectral norm, denoted by $\Vert A \Vert_2$.

$p = \infty, \quad \Vert A \Vert_{\infty} = \displaystyle{\max_i \sum_{j=1}^m} |a_{ij}|$.

Let us check it for $p=\infty$ on a blackboard.

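The spectral norm can be expressed as

$$ \Vert A \Vert_2 = \sup_{x \ne 0} \frac{\Vert A x \Vert_2}{\Vert x \Vert_{2}} = \sqrt{\lambda_{\max}(A^*A)} = \sigma_1(A), $$

where $\sigma_1(A)$ is the largest singular value of the matrix $A$ and $A^*$ is the conjugate transpose of $A$.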
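The closed-form expressions for the $1$-, $2$- and $\infty$-norms can be checked numerically. The following sketch uses plain NumPy and assumes the standard identity $\Vert A \Vert_2 = \sqrt{\lambda_{\max}(A^*A)} = \sigma_1(A)$. The matrix size and the random seed are arbitrary choices made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
# Small complex test matrix (size chosen arbitrarily)
A = rng.standard_normal((5, 7)) + 1j * rng.standard_normal((5, 7))

# p = 1: maximum absolute column sum
norm_1 = np.abs(A).sum(axis=0).max()

# p = infinity: maximum absolute row sum
norm_inf = np.abs(A).sum(axis=1).max()

# p = 2: square root of the largest eigenvalue of the Hermitian matrix A^* A
lam_max = np.linalg.eigvalsh(A.conj().T @ A).max()
norm_2 = np.sqrt(lam_max)

# Largest singular value (singular values are returned in descending order)
sigma_1 = np.linalg.svd(A, compute_uv=False)[0]

print(norm_1, np.linalg.norm(A, 1))
print(norm_inf, np.linalg.norm(A, np.inf))
print(norm_2, sigma_1, np.linalg.norm(A, 2))
```

Each printed line should show matching values up to rounding.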

```python
import jax
import jax.numpy as jnp

n = 100
m = 2000
a = jax.random.normal(jax.random.PRNGKey(0), (n, m))  # random n x m matrix

s1 = jnp.linalg.norm(a, 2)        # spectral norm (largest singular value)
s2 = jnp.linalg.norm(a, 'fro')    # Frobenius norm
s3 = jnp.linalg.norm(a, 1)        # 1-norm (maximum absolute column sum)
s4 = jnp.linalg.norm(a, jnp.inf)  # infinity-norm (maximum absolute row sum)

print('Spectral: {0:} \nFrobenius: {1:} \n1-norm: {2:} \ninfinity: {3:}'.format(s1, s2, s3, s4))
```

Several examples of optimization problems where matrix norms arise:

where $\odot$ denotes Hadamard product (elementwise)

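While a norm measures distance, the scalar product takes the angle into account.

For vectors it is defined as

$$ (x, y) = x^* y = \sum_{i=1}^n \overline{x}_i y_i, $$

where $\overline{x}$ denotes the complex conjugate of $x$. The Euclidean norm is then

$$ \Vert x \Vert_2 = \sqrt{(x, x)}, $$

or it is said the norm is induced by the scalar product.

For matrices, the Frobenius scalar product is defined as

$$ (A, B)_F = \sum_{i=1}^n \sum_{j=1}^m \overline{a}_{ij} b_{ij} \equiv \mathrm{trace}(A^* B), $$

where $\mathrm{trace}(A)$ denotes the sum of diagonal elements of $A$. One can check that $\|A\|_F = \sqrt{(A, A)_F}$.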
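The relation between the trace, the elementwise sum and the Frobenius norm is easy to verify numerically. The sketch below uses plain NumPy and assumes the Frobenius scalar product is $(A, B)_F = \mathrm{trace}(A^* B)$. The test matrices are arbitrary random examples.

```python
import numpy as np

rng = np.random.default_rng(1)
A = rng.standard_normal((4, 3)) + 1j * rng.standard_normal((4, 3))
B = rng.standard_normal((4, 3)) + 1j * rng.standard_normal((4, 3))

# Frobenius scalar product computed in two ways
dot_sum = np.sum(A.conj() * B)          # sum of conj(a_ij) * b_ij
dot_trace = np.trace(A.conj().T @ B)    # trace(A^* B)

# The Frobenius norm is induced by this scalar product
dot_aa = np.trace(A.conj().T @ A).real  # (A, A)_F is real and non-negative
fro_from_dot = np.sqrt(dot_aa)

# The scalar product is bounded by the product of the norms
bound = np.linalg.norm(A, 'fro') * np.linalg.norm(B, 'fro')

print(dot_sum, dot_trace)
print(fro_from_dot, np.linalg.norm(A, 'fro'))
print(abs(dot_trace), '<=', bound)
```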

Remark. The angle between two vectors is defined as

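$$ \cos \phi = \frac{(x, y)}{\Vert x \Vert_2 \Vert y \Vert_2}. $$

A similar expression holds for matrices.

An important property of the scalar product is the Cauchy-Schwarz inequality

$$ |(x, y)| \leq \Vert x \Vert_2 \Vert y \Vert_2, $$

and thus the angle between two vectors is defined properly.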

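For stability it is really important that the error does not grow after we apply some transformations.

Suppose you are given $\widehat{x}$, an approximation of $x$ such that

$$ \frac{\Vert x - \widehat{x} \Vert}{\Vert x \Vert} \leq \varepsilon. $$

If we apply a linear transformation $U$ to both vectors, $y = Ux$ and $\widehat{y} = U\widehat{x}$, we would like the relative error of $\widehat{y}$ to be no larger than $\varepsilon$. In the 2-norm this holds whenever $U$ preserves the norm of every vector.

Let $U$ be a complex $n \times n$ matrix with $\Vert U z \Vert_2 = \Vert z \Vert_2$ for all $z$.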

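This can happen if and only if (abbreviated as iff)

$$ U^* U = I_n, $$

where $I_n$ is the $n\times n$ identity matrix.

A complex $n\times n$ square matrix is called unitary if

$$ U^*U = UU^* = I_n, $$

which means that the columns and the rows of a unitary matrix both form an orthonormal basis in $\mathbb{C}^{n}$.

For rectangular matrices of size $m\times n$ ($n\not= m$) only one of the equalities can hold:

- $U^*U = I_n$ if $m > n$ (orthonormal columns),
- $UU^* = I_m$ if $m < n$ (orthonormal rows).

In the case of real matrices $U^* = U^T$, and matrices such that

$$ U^TU = UU^T = I $$

are called orthogonal.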

Important property: a product of two unitary matrices is a unitary matrix:

$$(UV)^* UV = V^* (U^* U) V = V^* V = I.$$

For the vector 2-norm we have already seen that $\Vert U z \Vert_2 = \Vert z \Vert_2$ for any unitary $U$.

One can show that unitary matrices also do not change the matrix norms $\|\cdot\|_2$ and $\|\cdot\|_F$, i.e. for any square $A$ and unitary $U$, $V$:

$$ \| UAV\|_2 = \| A \|_2, \qquad \| UAV\|_F = \| A \|_F. $$

For $\|\cdot\|_2$ it follows from the definition of an operator norm and the fact that vector 2-norm is unitary invariant.

For $\|\cdot\|_F$ it follows from $\|A\|_F^2 = \mathrm{trace}(A^*A)$ and the fact that $\mathrm{trace}(BC) = \mathrm{trace}(CB)$.
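The invariance can also be observed numerically. In the sketch below (plain NumPy), random unitary matrices are obtained from the QR decomposition of a random complex matrix, which is one convenient way to generate them and not something prescribed by the lecture. The helper `random_unitary` is an illustrative name.

```python
import numpy as np

def random_unitary(n, rng):
    """Unitary factor Q from the QR decomposition of a random complex matrix."""
    z = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
    q, _ = np.linalg.qr(z)
    return q

rng = np.random.default_rng(2)
n = 6
U = random_unitary(n, rng)
V = random_unitary(n, rng)
A = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))

# Spectral and Frobenius norms before and after the unitary transformations
spec_before = np.linalg.norm(A, 2)
spec_after = np.linalg.norm(U @ A @ V, 2)
fro_before = np.linalg.norm(A, 'fro')
fro_after = np.linalg.norm(U @ A @ V, 'fro')

# The 1-norm is printed for contrast; it is not unitary invariant in general
one_before = np.linalg.norm(A, 1)
one_after = np.linalg.norm(U @ A @ V, 1)

print('spectral :', spec_before, spec_after)
print('Frobenius:', fro_before, fro_after)
print('1-norm   :', one_before, one_after)
```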

There are two important classes of unitary matrices, compositions of which can produce any unitary matrix:

- Householder matrices
- Givens matrices

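Other important examples are permutation matrices, whose rows (columns) are a permutation of the rows (columns) of the identity matrix, and the Fourier matrix $F_n = \frac{1}{\sqrt{n}} \left\{ e^{-i \frac{2\pi k l}{n}} \right\}_{k,l=0}^{n-1}$.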

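A Householder matrix is a matrix of the form

$$H \equiv H(v) = I - 2 v v^*,$$

where $v$ is an $n \times 1$ column and $v^* v = 1$.

Important property: for any vector $x$ there is a Householder matrix $H$ that zeroes all elements of $x$ except the first one, i.e. $Hx = \alpha e_1$.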

Proof (for real case). Let $e_1 = (1,0,\dots, 0)^T$, then we want to find $v$ such that

$$ H x = x - 2(v^* x) v = \alpha e_1, $$

where $\alpha$ is an unknown constant. Since $\|\cdot\|_2$ is unitary invariant we get

$$\|x\|_2 = \|Hx\|_2 = \|\alpha e_1\|_2 = |\alpha|,$$

and

$$\alpha = \pm \|x\|_2.$$

Also, we can express $v$ from $x - 2(v^* x) v = \alpha e_1$:

$$v = \dfrac{x-\alpha e_1}{2 v^* x}.$$

Multiplying the equation $x - 2(v^* x) v = \alpha e_1$ by $x^*$ from the left we get

$$x^* x - 2 (v^* x) x^* v = \alpha x_1, $$

or, since $x^* v = v^* x$ in the real case,

$$ \|x\|_2^2 - 2 (v^* x)^2 = \alpha x_1. $$

Therefore,

$$ (v^* x)^2 = \frac{\|x\|_2^2 - \alpha x_1}{2}. $$

So, $v$ exists and equals

$$ v = \dfrac{x \mp \|x\|_2 e_1}{2v^* x} = \dfrac{x \mp \|x\|_2 e_1}{\pm\sqrt{2(\|x\|_2^2 \mp \|x\|_2 x_1)}}. $$

Using the obtained property we can make an arbitrary matrix $A$ upper triangular. First we find $H_1$ that zeroes the first column of $A$ below the diagonal, then $H_2=\begin{bmatrix}1 & \\ & {\widetilde H}_2 \end{bmatrix}$ that does the same for the second column, which gives

$$ H_2 H_1 A = \begin{bmatrix} \times & \times & \times & \times \\ 0 & \times & \times & \times \\ 0 & 0 & \boldsymbol{\times} & \times\\ 0 &0 & \boldsymbol{\times} & \times \\ 0 &0 & \boldsymbol{\times} & \times \end{bmatrix}. $$

Then, finding $H_3=\begin{bmatrix}I_2 & \\ & {\widetilde H}_3 \end{bmatrix}$ such that

$$ {\widetilde H}_3 \begin{bmatrix} \boldsymbol{\times}\\ \boldsymbol{\times} \\ \boldsymbol{\times} \end{bmatrix} = \begin{bmatrix} \times \\ 0 \\ 0 \end{bmatrix}, $$

we get

$$ H_3 H_2 H_1 A = \begin{bmatrix} \times & \times & \times & \times \\ 0 & \times & \times & \times \\ 0 & 0 & {\times} & \times\\ 0 &0 & 0 & \times \\ 0 &0 & 0 & \times \end{bmatrix}. $$

Finding $H_4$ by analogy we arrive at an upper-triangular matrix.

Since products and inverses of unitary matrices are unitary, we get:

Corollary (QR decomposition). Every $A\in \mathbb{C}^{n\times m}$ can be represented as

$$ A = QR, $$

where $Q$ is unitary and $R$ is upper triangular.

See the poster for the sizes of $Q$ and $R$ when $n>m$ and $n<m$.
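The triangularization by Householder reflections can be sketched in a few lines for the real case. This is a minimal illustration in plain NumPy, not an optimized routine. The function names `householder_vector` and `householder_qr` are hypothetical, and the sign $\alpha = -\mathrm{sign}(x_1)\|x\|_2$ is a choice made here to avoid cancellation when forming $x - \alpha e_1$.

```python
import numpy as np

def householder_vector(x):
    """Unit vector v such that (I - 2 v v^T) x = alpha * e_1 for a real vector x."""
    alpha = -np.copysign(np.linalg.norm(x), x[0])  # alpha = -sign(x_1) * ||x||_2
    v = np.array(x, dtype=float)
    v[0] -= alpha                                  # v is proportional to x - alpha * e_1
    norm_v = np.linalg.norm(v)
    if norm_v == 0.0:                              # x = 0: any unit vector works
        v[0] = 1.0
        return v
    return v / norm_v

def householder_qr(A):
    """Return Q (n x n, orthogonal) and R (n x m, upper triangular) with A = Q R."""
    R = np.array(A, dtype=float)
    n, m = R.shape
    Q = np.eye(n)
    for k in range(min(n - 1, m)):
        v = householder_vector(R[k:, k])
        # Apply H_k = I - 2 v v^T to rows k..n-1 of R
        R[k:, :] -= 2.0 * np.outer(v, v @ R[k:, :])
        # Accumulate Q = H_1 H_2 ... H_k (each H_k is its own inverse)
        Q[:, k:] -= 2.0 * np.outer(Q[:, k:] @ v, v)
    # Entries below the diagonal are zero up to rounding; set them to exact zeros
    return Q, np.triu(R)

rng = np.random.default_rng(3)
A = rng.standard_normal((5, 4))
Q, R = householder_qr(A)
print(np.linalg.norm(Q @ R - A))             # reconstruction error, close to zero
print(np.linalg.norm(Q.T @ Q - np.eye(5)))   # deviation of Q from orthogonality
```

In this sketch $Q$ is the full $n \times n$ factor and $R$ has the same shape as $A$.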

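A Givens matrix is a matrix

$$ G = \begin{bmatrix} \cos \alpha & -\sin \alpha \\ \sin \alpha & \cos \alpha \end{bmatrix}, $$

which is a rotation. In the general case, we select a plane $(i, j)$ and rotate the vector $x$, so that $x' = G x$ differs from $x$ only in the $i$-th and $j$-th positions:

$$ x'_i = x_i\cos \alpha - x_j\sin \alpha , \quad x'_j = x_i \sin \alpha + x_j\cos\alpha, $$

with all other components of $x$ remaining unchanged.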
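To see how such a rotation zeroes a chosen component, one can embed the $2 \times 2$ rotation into the identity matrix and pick $\alpha$ so that $x'_j = 0$. The sketch below is written in plain NumPy. The helper `givens` and the test vector are illustrative, and the angle follows from requiring $x_i \sin\alpha + x_j \cos\alpha = 0$.

```python
import numpy as np

def givens(n, i, j, alpha):
    """n x n identity with a rotation by alpha embedded in the (i, j) plane."""
    G = np.eye(n)
    c, s = np.cos(alpha), np.sin(alpha)
    G[i, i] = c
    G[i, j] = -s
    G[j, i] = s
    G[j, j] = c
    return G

x = np.array([3.0, 1.0, 4.0, 2.0])
i, j = 0, 2

# Choose alpha with cos(alpha) = x_i / r and sin(alpha) = -x_j / r, r = sqrt(x_i^2 + x_j^2),
# so that x'_j = x_i sin(alpha) + x_j cos(alpha) = 0 and x'_i = r.
alpha = np.arctan2(-x[j], x[i])
G = givens(len(x), i, j, alpha)
y = G @ x

print(y)  # [5, 1, 0, 2] up to rounding: only positions i and j have changed
```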

```python
import jax.numpy as jnp
import matplotlib.pyplot as plt
%matplotlib inline

alpha = -3 * jnp.pi / 4  # rotation angle
# 2 x 2 Givens (rotation) matrix
G = jnp.array([
    [jnp.cos(alpha), -jnp.sin(alpha)],
    [jnp.sin(alpha), jnp.cos(alpha)]
])
x = jnp.array([-1. / jnp.sqrt(2), 1. / jnp.sqrt(2)])
y = G @ x  # rotated vector

# Draw x and y as arrows starting at the origin
plt.quiver([0, 0], [0, 0], [x[0], y[0]], [x[1], y[1]], angles='xy', scale_units='xy', scale=1)
plt.xlim(-1., 1.)
plt.ylim(-1., 1.)
```

Similarly, we can make a matrix upper triangular using Givens rotations:

$$\begin{bmatrix} \times & \times & \times \\ \bf{*} & \times & \times \\ \bf{*} & \times & \times \end{bmatrix} \to \begin{bmatrix} * & \times & \times \\ * & \times & \times \\ 0 & \times & \times \end{bmatrix} \to \begin{bmatrix} \times & \times & \times \\ 0 & * & \times \\ 0 & * & \times \end{bmatrix} \to \begin{bmatrix} \times & \times & \times \\ 0 & \times & \times \\ 0 & 0 & \times \end{bmatrix} $$

The SVD will be considered later in more detail.

Theorem. Any matrix $A\in \mathbb{C}^{n\times m}$ can be written as a product of three matrices:

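$$ A = U \Sigma V^*, $$

where

- $U$ is an $n \times n$ unitary matrix,
- $\Sigma$ is an $n \times m$ diagonal matrix with non-negative elements $\sigma_1 \geq \ldots \geq \sigma_{\min (m,n)}$ on the diagonal,
- $V$ is an $m \times m$ unitary matrix.

Moreover, if $\text{rank}(A) = r$, then $\sigma_{r+1} = \dots = \sigma_{\min (m,n)} = 0$.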

See poster for the visualization.
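The statement of the theorem can be illustrated numerically. The sketch below uses plain NumPy and builds a matrix of rank $r$ as a product of two random factors (rank $r$ with probability one), then inspects its singular values. The sizes and the seed are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(4)
n, m, r = 6, 4, 2

# Product of an n x r and an r x m random matrix: rank r with probability one
A = rng.standard_normal((n, r)) @ rng.standard_normal((r, m))

# Full SVD: U is n x n, Vh = V^* is m x m, s holds min(n, m) singular values
U, s, Vh = np.linalg.svd(A)

# Place the singular values on the diagonal of an n x m matrix Sigma
Sigma = np.zeros((n, m))
Sigma[:len(s), :len(s)] = np.diag(s)

print('singular values:', s)
print('reconstruction error:', np.linalg.norm(U @ Sigma @ Vh - A))
print('sigma_1 vs spectral norm:', s[0], np.linalg.norm(A, 2))
```

The singular values after the first $r$ should be zero up to rounding, and $\sigma_1$ should coincide with the spectral norm.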

- The most important matrix norms are the Frobenius and spectral norms.
- Unitary matrices preserve these norms.
- There are two "basic" classes of unitary matrices: Householder and Givens matrices.
